from nbdev.config import get_configCorefunctions

Corefunctionality for data preparation of sequential data for pytorch, fastai models

5.3 Spectrogram-Datablock

TensorSpectrogramOutput

TensorSpectrogramOutput (x, **kwargs)

A Tensor which support subclass pickling, and maintains metadata when casting or after methods

TensorSpectrogramInput

TensorSpectrogramInput (x, **kwargs)

A Tensor which support subclass pickling, and maintains metadata when casting or after methods

TensorSpectrogram

TensorSpectrogram (x, **kwargs)

A Tensor which support subclass pickling, and maintains metadata when casting or after methods

project_root = get_config().config_file.parent

f_path = project_root / 'test_data/WienerHammerstein'

hdf_files = get_files(f_path,extensions='.hdf5',recurse=True)seq_spec = TensorSpectrogramInput(HDF2Sequence(['u'],to_cls=TensorSpectrogramInput)._hdf_extract_sequence(hdf_files[0],r_slc=2000))

seq_spec.shapetorch.Size([2000, 1])Sequence2Spectrogram

Sequence2Spectrogram (scaling='log', n_fft:int=400, win_length:Optional[int]=None, hop_length:Optional[int]=None, pad:int=0, window_fn:Callable[...,torch.Tensor]=<built-in method hann_window of type object at 0x7fa5c7f36c20>, power:Optional[float]=2.0, normalized:bool=False, wkwargs:Optional[dict]=None, **kwargs)

calculates the FFT of a sequence

SpectrogramBlock

SpectrogramBlock (seq_extract, padding=False, n_fft=100, hop_length=None, normalized=False)

A basic wrapper that links defaults transforms for the data block API

dls_spec = DataBlock(blocks=(SpectrogramBlock.from_hdf(['u','y'],n_fft=100,hop_length=10,normalized=True),

SequenceBlock.from_hdf(['y'],TensorSequencesOutput)),

get_items= CreateDict([DfHDFCreateWindows(win_sz=2000+1,stp_sz=10,clm='u')]),

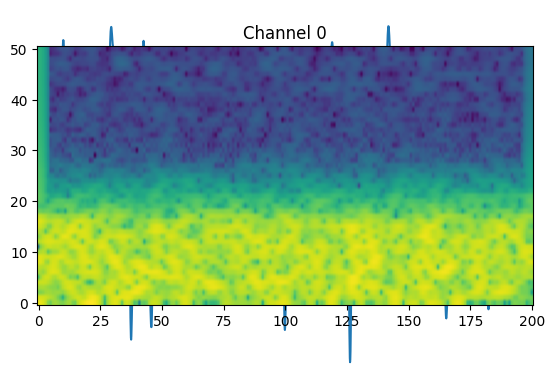

splitter=ApplyToDict(ParentSplitter())).dataloaders(hdf_files)dls_spec.one_batch()[0].shapetorch.Size([64, 1, 51, 201])dls_spec.show_batch(max_n=1)